more on dataset IDs

STREAMS' dataset IDs have been already brought up in this place. In order to increase the understanding of the underlying concept we would like to go a bit into detail.

The near real-time database (which serves STREAMS) relies on a postgres extension called timescaledb. It stores columnar/row data and creates continuous aggregates of numeric data. This allows (pre-aggregated) data to be queried very flexible with instant delivery. For more technical backgrounds the company behind timescaledb described their technical approach in a white paper. So, the database is very powerful, nonetheless it still needs to be used properly. Otherwise details can ruin your day.

Let's say there is a datastream available for vessel:loveboat:tsg:sbe38:temperature (SBE38 thermometer installed in a TSG and mounted to the MS Loveboat measuring temperature). All data for that specific stream is stored in a data table. One technical constraint is in the nature of time series. There simply cannot be more than one measured value per time for a certain data table. From the daily life of a data manager and thru the eyes of the database it simply makes no difference if there is already data available in the data table for that point in time or if ingested data looks like this:

bash

datetime par1 par2 par3 par4 par5

2026-02-02T19:08:20 203 14 167 5 13801

2026-02-02T19:08:20 274 28 266 8 22257

2026-02-02T19:08:20 304 74 100 1 11648If there was no such data available before the first row would be possible to ingest (theory !!!), but since the other rows are ingested with the same file this would fail. Doing so would result in a client error. In this case the server returns a detailed answer what's wrong.

json

{

'status': 409,

'reason': {'status': 'CONFLICT',

'message': "Multiple values for one stream at the same time are not supported. At least a value for the stream 'station:verytheoretical:data:parameter_xyz with stream id 0815 and datetime '2024-01-01T01:00' already exists in dataset with id 4902307. Correct your dataset or consider deleting the existing dataset.",

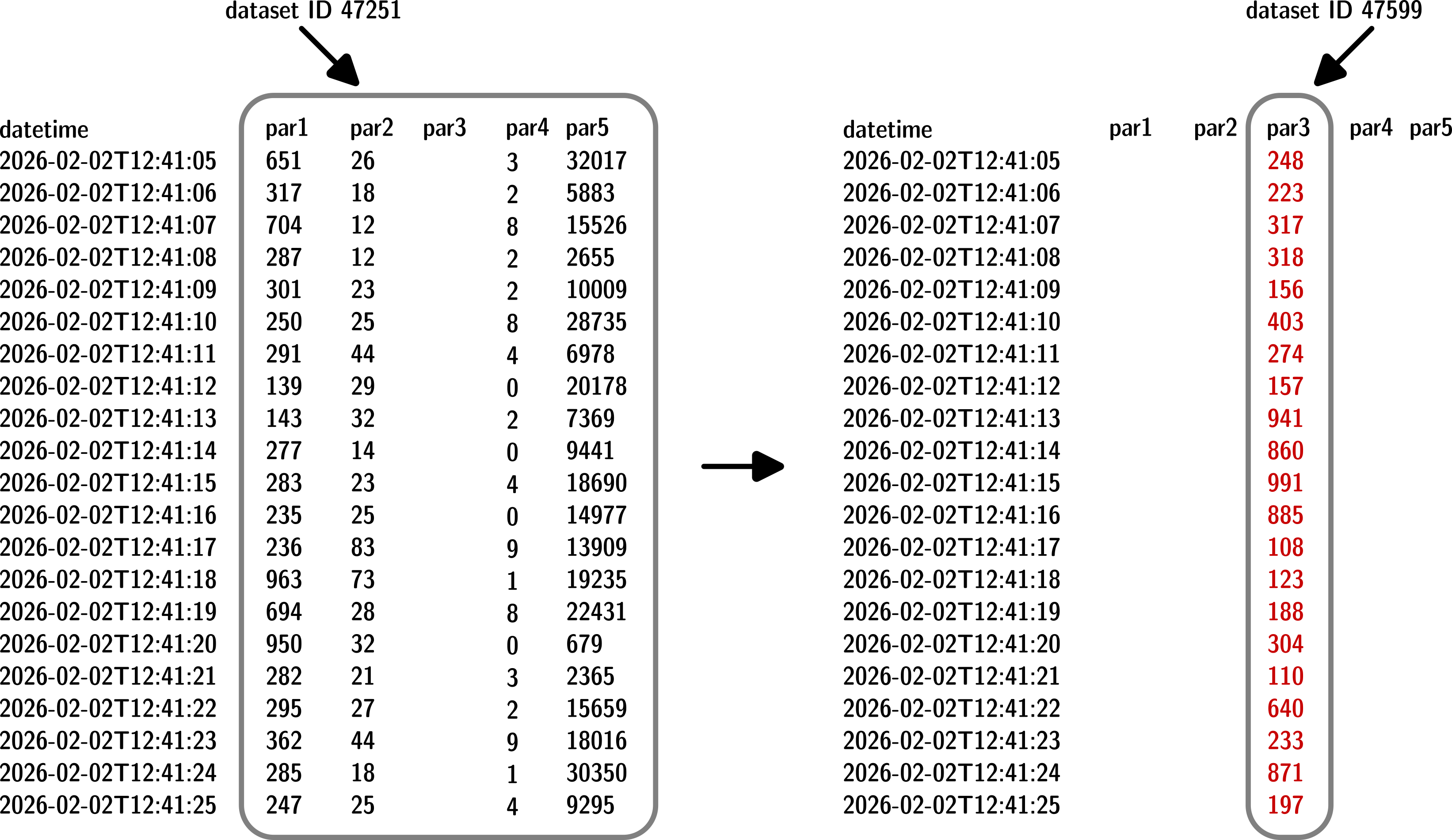

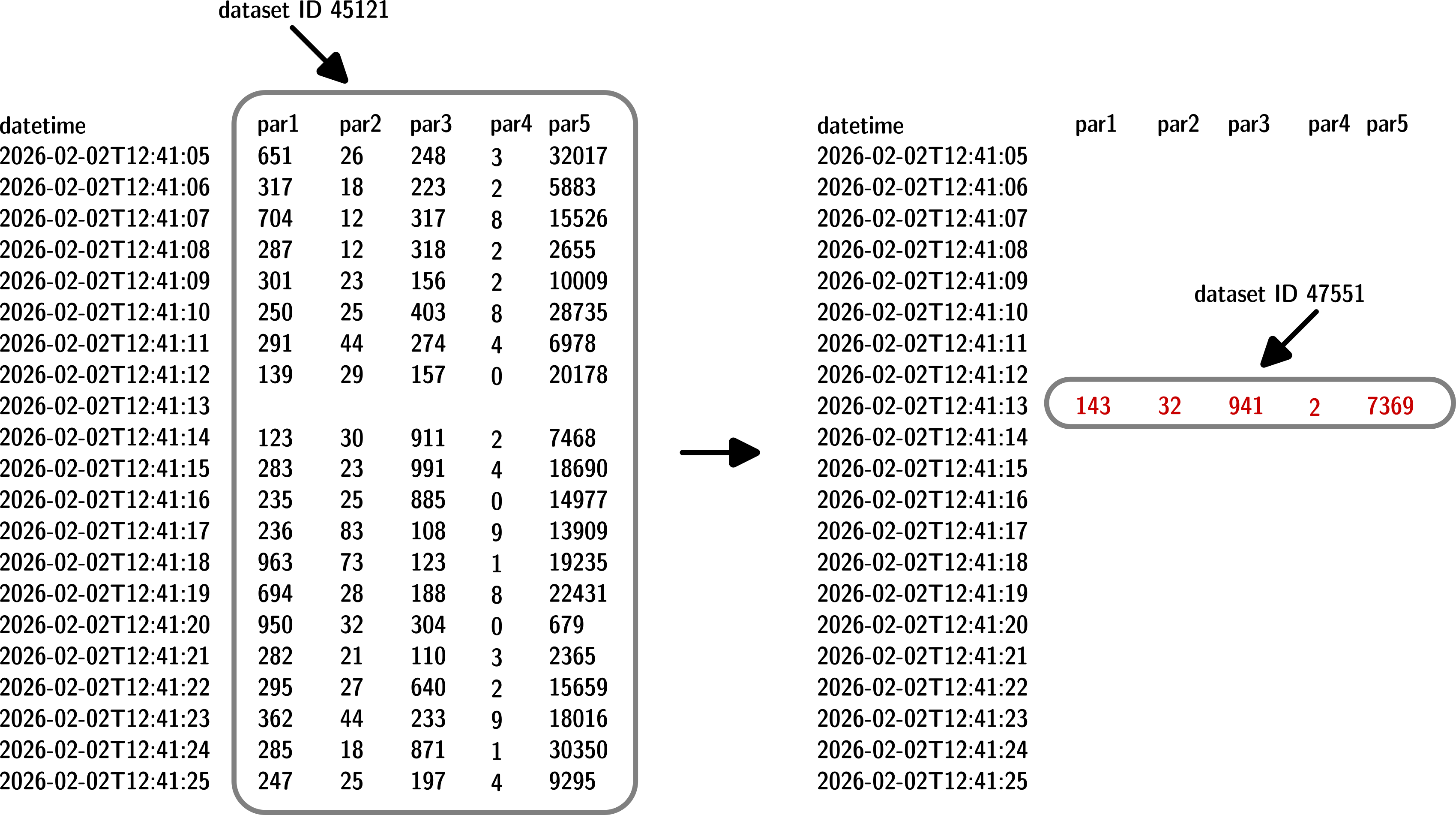

'payload': {'duplicates': [{ ... }]}}}To illustrate possible issues a schematic sketch of two situations: The dataset with ID 47251 is lacking a column. This could be due to the fact, that data of par3 is available at a later stage of processing. The missing column is ingested later and gets a unique dataset ID (47599). The second situation shows a missing row in dataset 45121. This might be due to a lacking/corrupt file during ingest. Later the gap in the datastream is closed by ingesting the lacking row (new dataset ID 47551). In both cases there is no risk to duplicate data and persistent streams of data can be retrieved.

Scheme of two possible ways to ingest: Left-hand side missing column added, right-hand side a missing row is added.

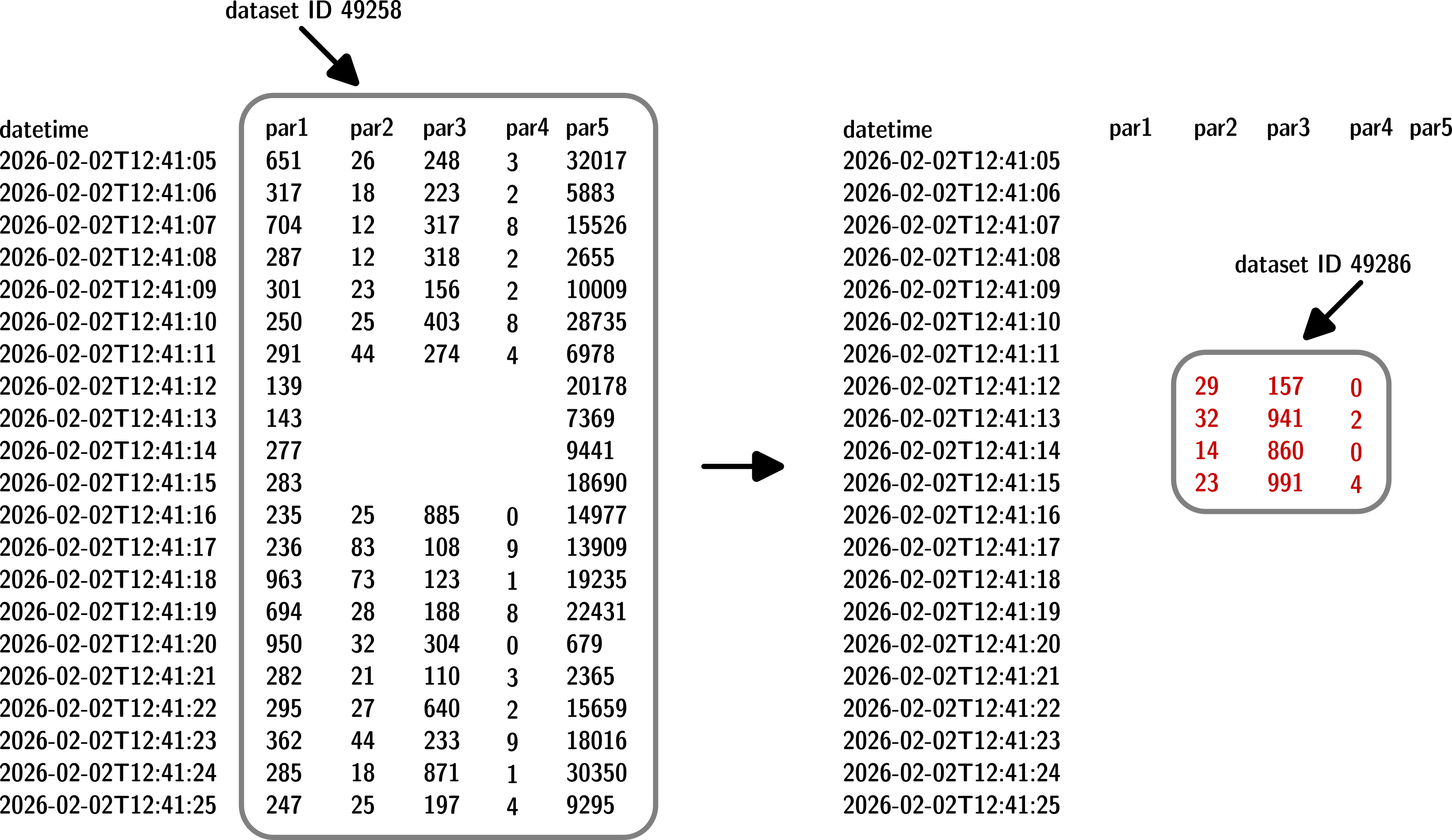

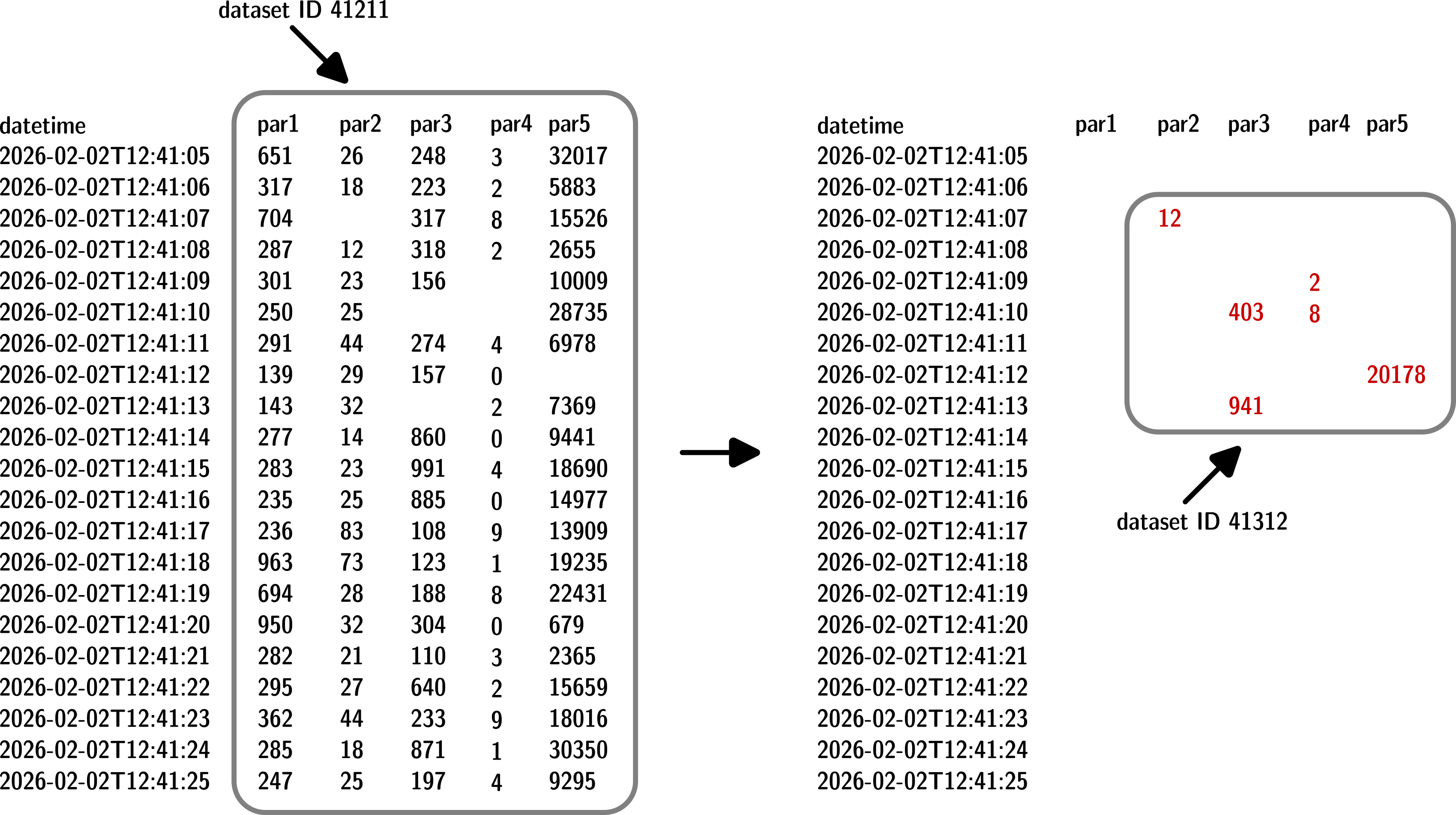

Two more use cases: For whatever reason there is a gap in dataset 49258 spanning multiple rows and columns at once. When the lacking content is ingested a new dataset (ID 49286) is created. So for par2, par3, and par3 data can be retrieved/aggregated/used in dashboards over multiple datasets without further ado. Another scenario depicts missing data in dataset 41211 across various parameters and time. So without raising errors the missing data must be ingested in the gaps of dataset 41211. The resulting dataset ID is 41312.

Another scheme of two possible ways to ingest: Left-hand side shows a coherent block of missing values, right-hand side missing data scattered across time and parameters is added.

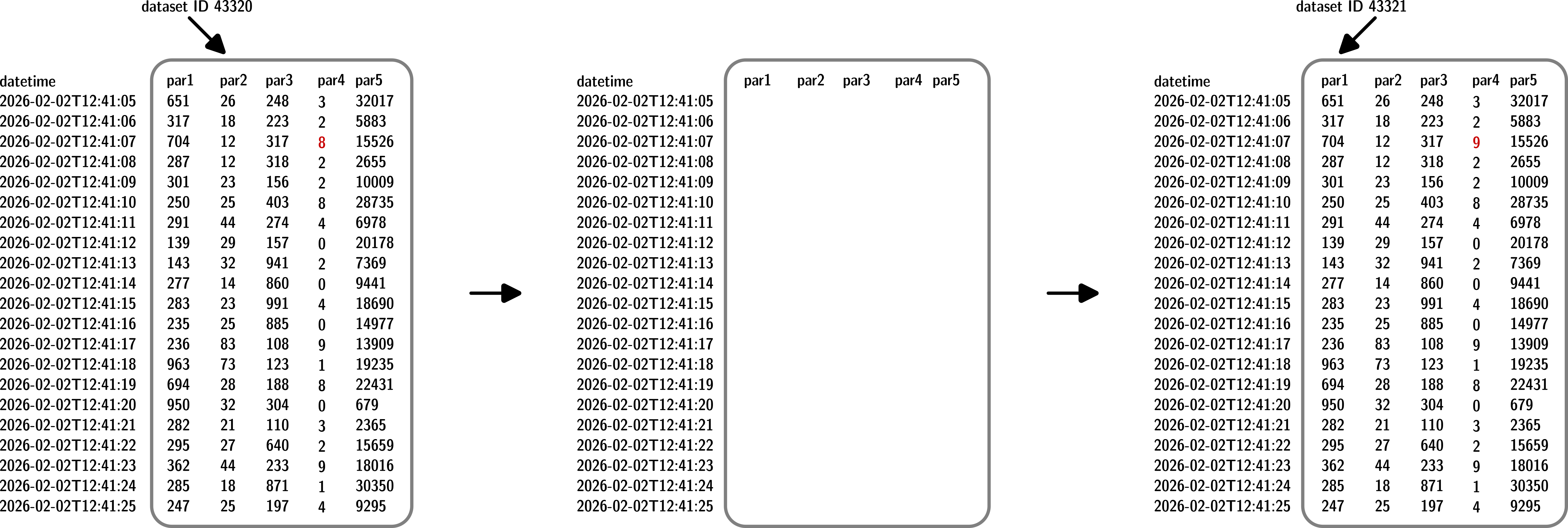

But what if there is data wrong in the database? Besides chicken-headless-mode one could just solve the issue. We have seen that data that is ingested together forms a dataset ID. So, if one value is wrong and needs to be altered there is no alternative to removing the dataset ID that incorporates the faulty value and then replace it with the correct data. It doesn't matter if there is a single value incorrect in the file or whole columns, rows, or large fractions. The procedure is always the same. If there is incorrect data that should be replaced, the old dataset ID needs to be removed in advance. Otherwise the constraints of the database are violated.

Schematic data table.

If data is formatted following the format specifications of the nrt format most causes that violate the database's constraints are omitted automatically.

Koppe, R., Immoor, S., & Anselm, N. (2019). Format specification for Near Real-Time format. doi.org/10.5281/zenodo.15783112